Sashay? Shantay!

A first look at the EU’s DSA transparency database

Introduction

After analyzing American companies’ transparency disclosures about online child sexual exploitation, I am now turning to Europe, specifically the European Union’s Digital Services Act (DSA) transparency database. It collects so-called statements of reasons (SoR) for content moderation decisions by digital service providers. In theory, said database should be a wellspring of insight into content moderation by the tech industry. After all, all but very small providers need to report every individual action on content as well as accounts, and they need to do so “without undue delay,” i.e., on the order of days.

Brussels, We have, Uhm, Problems!

In practice, well, we need numbers to track all issues:

Lack of documentation: Somehow, the EU has managed not to document the most important part of its database schema, the categorization of content moderation decisions into coarse and fine labels, i.e., those starting with STATEMENT_CATEGORY and KEYWORD, respectively. For instance, what exactly are “unsafe challenges”? According to the documentation, they are related to the protection of minors. But that’s the extent of public material about this keyword. I couldn’t find anything else. WTF? 🙁

Incomplete database entries: Every service provider must submit a record to the database for every content moderation decision, including when the moderation resulted in demotion instead of content removal. They also must do so in a timely manner. That’s great. But the required information is incomplete. Notably, fine labels are entirely optional and only 3.5% of entries include them. That renders 96.5% of database entries less than useful. 😣

Closed source development practices: The European Commission is only pretending to develop the necessary software in the open. Its only GitHub repository has issues disabled, and its only GitLab repository has only two contributors who made 13 commits over 4+ months of the repository’s existence. By comparison, I made 206 commits over the exact same time range on one of my projects and shantay, the alternative tool I’m developing, saw 58 commits during its first week. 😫

Inappropriate license: The EU’s Python-based command line tool doesn’t look terrible, but its license gives me serious pause. The EUPL is the EU’s very own copyleft license, roughly comparable to the GPLv2. Given that the majority of projects nowadays use the far more permissive MIT or Apache 2.0 licenses, using a relatively obscure copyleft license for public interest tooling seems like a poor choice to me. 😭

Buggy distribution: Three CSV files in the distribution of zipped archives of zipped CSV files, sor-global-2024-08-29-full-00030-00002.csv, sor-global-2024-09-13-full-00011-00000.csv, and sor-global-2024-09-14-full-00046-00001.csv, contain coding errors such as invalid and overlapping combinations of quotes escaped as "" and \". Not surprisingly, they trip up Pola.rs’ and PyArrow’s CSV parsers. Additionally, CSV files use empty strings as well as [] to encode empty lists. While technically valid, if not careful, the latter parses as a list with a single null and hence must be filtered out before. 😡

Limited data availability: According to the EU’s data retention policy, “after 18 months (540 days), the daily dumps are removed from the data download section and are archived in a cold storage.” It is unclear what motivates the choice of 18 months, which seems awfully short for a public interest resource. Worse, the database started operations on , and so the first daily archives are about to vanish into the Arctic Circle. 🤯

Hostility towards feedback: The feedback form for the DSA transparency database appears to have been designed to minimize the receipt thereof. First, it requires registration for an EU account. Second, text input is limited to 500 characters, which one discovers only after a rejected submission. That isn’t quite the forced terseness of X (280) or Bluesky (300), but it is no better than Mastodon (500), all of which qualify as microblogging platforms. In a plainly bizarre twist, a separate form for asking questions does not require registration and accepts up to 3,000 characters. 😵‍💫

Oof. That doesn’t sound so good. Sashay away!

Introducing Shantay

To better explore the DSA SoR DB, I am writing my own Python tool, shantay. Unlike the EU’s tool, shantay is being developed in the open, as open source, with a permissive license. Like the EU’s tool, it automatically downloads daily CSV archives, extracts category-specific SoRs into parquet files, and then analyzes the extracted data. The EU’s implementation of that functionality is fairly general, based on YAML configuration files. It also relies on Spark for data wrangling. That does ensure scalability—if you can afford the necessary cluster and live with the attendant complexity (or are paid to do so). By contrast, shantay is positively scrappy and makes do with what you can comfortably spare. A laptop and 2 TB Samsung T7 drive for long-term storage will do. Shantay also doesn’t scale. In fact, it may take a couple of days and nights to download all of the DSA SoR DB and extract all working data. While slow, it sure works for me.

Making Do With Less

At the same time, making do with less presents its own challenges. The first are interruptions. Maybe, the humming of a computer busy saturating both disk and network is getting to you. Maybe, shantay has a bug or two. In either case, shantay’s download of the DSA SoR DB is almost certain to be interrupted, probably even more than once. Hence, shantay is careful not to lose (too much) work upon such interruptions. It clearly separates the current working directory, “staging,” from the directories used for storing zip archives and parquet files. It performs incremental updates only in staging and otherwise bulk copies files in and out of long-term-storage directories. While shantay keeps helpful metadata in a meta.json file, it also knows how to recover mission-critical information by scanning the file system and merging partial contents of that file.
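The separation between staging and long-term storage, and the recovery of metadata after an interruption, might look roughly like the following. This is a minimal sketch, not shantay’s actual code: the function names, the ".partial" suffix, the one-parquet-file-per-day layout, and the "batches" field of meta.json are all assumptions made up for illustration.

```python
import json
import shutil
import tempfile
from pathlib import Path


def publish(staging: Path, storage: Path, name: str) -> Path:
    """Bulk copy a finished file from staging into long-term storage."""
    storage.mkdir(parents=True, exist_ok=True)
    partial = storage / (name + ".partial")  # hypothetical naming scheme
    shutil.copy2(staging / name, partial)
    # Renaming within one directory is atomic on POSIX file systems, so an
    # interruption leaves either the complete file or a ".partial" leftover.
    partial.replace(storage / name)
    return storage / name


def recover_meta(storage: Path) -> dict:
    """Rebuild metadata by merging meta.json with a scan of the directory."""
    meta = {"batches": {}}
    try:
        saved = json.loads((storage / "meta.json").read_text(encoding="utf-8"))
        meta["batches"].update(saved.get("batches", {}))
    except (OSError, ValueError):
        pass  # Missing or corrupt metadata: rely on the scan alone.
    # Every parquet file on disk counts, even if meta.json never recorded it.
    for path in sorted(storage.glob("*.parquet")):
        meta["batches"].setdefault(path.stem, {"recovered": True})
    return meta


with tempfile.TemporaryDirectory() as tmp:
    staging, storage = Path(tmp, "staging"), Path(tmp, "storage")
    staging.mkdir()
    (staging / "2025-01-31.parquet").write_bytes(b"placeholder, not parquet")
    publish(staging, storage, "2025-01-31.parquet")
    # Simulate stale metadata that predates the latest published file.
    (storage / "meta.json").write_text(
        '{"batches": {"2025-01-30": {"rows": 709}}}', encoding="utf-8"
    )
    recovered = recover_meta(storage)
```

The point of the design is that the file system, not the metadata file, is the source of truth: anything meta.json knows is kept, and anything it missed is filled in from what is actually on disk.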

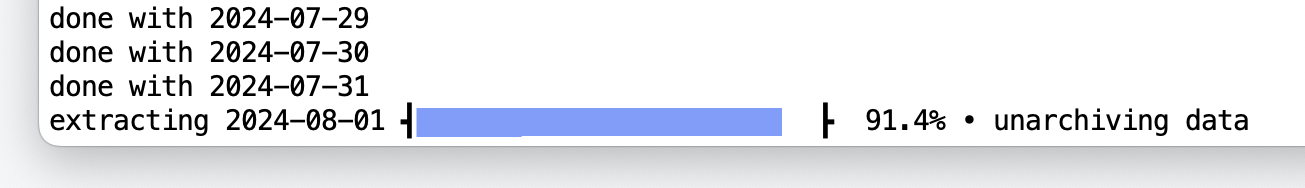

The second challenge is keeping humans in the loop. That includes satisfying quick checks on the tool’s progress as well as more thorough post-mortem inspections to determine how far the tool got and what went wrong. For the quick check variety, shantay keeps updating a status line on the console. For longer lasting tasks, such as downloading a release archive, it includes a progress bar on the status line. It even computes the download speed and includes it next to the progress bar. Here it is busy converting from CSV to parquet:

For the more thorough inspection variety, shantay keeps a persistent log on disk. The log format represents a compromise: It is structured and regular enough to be easily parsed by software, but also unstructured enough to still be human-readable. For example, with ‑v for verbose mode, shantay logged:

2025-02-28 06:23:44 [DEBUG] extracted rows=709, using="Pola.rs with glob", file="sor-global-2025-01-31-full-00056.csv.zip"

Apparently, shantay was busy at 6:24am extracting 709 rows of data using the fast path with Pola.rs parsing all CSV files contained in the named archive in one operation.
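To illustrate the “easily parsed by software” half of that compromise, here is a small sketch that takes the log line above apart with two regular expressions. The grammar it assumes (timestamp, bracketed level, a short message, then comma-separated key=value pairs with optional double quotes) is inferred from that single example line, not from any specification of shantay’s log format, and parse_log_line is a hypothetical helper.

```python
import re
from datetime import datetime

# Timestamp, bracketed level, then the free-form remainder of the line.
LINE = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) \[([A-Z]+)\] (.*)$")
# key=value pairs, where the value is either double-quoted or a bare token.
FIELD = re.compile(r'(\w+)=(?:"([^"]*)"|([^,\s]+))')


def parse_log_line(line: str) -> dict:
    """Split one log line into timestamp, level, message, and fields."""
    match = LINE.match(line.strip())
    if match is None:
        raise ValueError(f"not a log line: {line!r}")
    timestamp, level, rest = match.groups()
    first = FIELD.search(rest)
    fields = {}
    for key, quoted, bare in FIELD.findall(rest):
        # Bare all-digit values become integers; everything else stays text.
        fields[key] = int(bare) if bare.isdigit() else (quoted or bare)
    return {
        "timestamp": datetime.strptime(timestamp, "%Y-%m-%d %H:%M:%S"),
        "level": level,
        # The message is whatever precedes the first key=value pair.
        "message": rest[: first.start()].strip() if first else rest,
        "fields": fields,
    }


entry = parse_log_line(
    "2025-02-28 06:23:44 [DEBUG] extracted rows=709, "
    'using="Pola.rs with glob", file="sor-global-2025-01-31-full-00056.csv.zip"'
)
```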

The third challenge is fully utilizing what is available in terms of hardware, notably, CPU and RAM. That excludes not only Java-based Spark but also Python-based Pandas. The latter certainly is popular. But it isn’t particularly fast and also suffers from an unwieldy and inconsistent interface. Instead, shantay builds on Pola.rs. This data frame library has a simpler and cleaner interface than Spark or Pandas. Its core is written in Rust, which ensures good performance. With a little help from PyO3, it also integrates seamlessly with Python. Using Pola.rs, shantay chugs through a month’s single digit gigabytes of working data, loaded from hundreds of parquet files with a single line of Pola.rs using two wildcards, and takes maybe 30 seconds to process 1.5 years’ worth of data. Not bad. Then again, on my desktop, shantay currently takes about an hour to ingest 16 days of CSV files, assuming that the data has been downloaded already. Since that saturates neither CPU nor disks, parallelizing should help, and I have already written much of the code for doing so.

Sharp Edges and Papercuts

In my experience, there is one Pola.rs feature that just isn’t worth the trouble, namely automatic schema inference when reading CSV files and also when directly instantiating data frames. The feature isn’t even unique to Pola.rs. Pandas also performs schema inference and Pandas’ inference has also tripped me up. Alas, Pola.rs’ schema inference seems more brittle, and its error messages, without any line numbers when reading CSV files, are positively rude. Pola.rs’ inference also doesn’t handle nested list values, which appear in several DSA SoR DB columns.

The solution is to disable automatic inference when reading CSV files, setting infer_schema to False, while also specifying types for unproblematic columns with schema_overrides and using Pola.rs’ string operators to clean up more problematic columns before explicitly converting them to their proper types. While this approach does require a bit more code even for columns that don’t require cleanup, it also has proven to be robust. It also simplified integration of a second CSV parser into shantay because the code necessary for converting the CSV parser’s output to a typed representation was already written. Alas, disabling automatic inference when manually constructing a data frame is a bit more involved than setting a Boolean option: You need to define a schema that maps all columns to strings.

Integrating a fallback CSV parser became necessary when Pola.rs’ CSV parser choked on the encoding errors described above. While looking for a work-around, I tested other CSV parsers on the first offending file. PyArrow’s CSV parser failed as well. However, the Python standard library’s CSV parser succeeded. It also preserved all of the original text, minus the bad escape sequences. I had my fallback and implemented the following three-level CSV parsing strategy: By default, shantay uses Pola.rs’ CSV parser with a wildcard in the file name, reading several CSV files in one operation. If that fails, shantay tries to read the CSV files again, with the same parser but now one file at a time. When that fails on one file, shantay falls back to the Python standard library’s CSV parser. Once implemented, shantay processed the archive with the first broken CSV file without a hitch. I only learned of the other two files from its log.
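The three-level strategy can be sketched as follows. To keep the example self-contained, the fast parser is passed in as two callables, read_glob and read_one, which are hypothetical stand-ins for the Pola.rs calls; only the last level, the standard library’s csv module, is real here. The strict_* functions merely simulate a parser that rejects backslash-escaped quotes.

```python
import csv
import tempfile
from pathlib import Path


def read_with_stdlib(path):
    """Level 3: slow but lenient parsing with Python's built-in csv module."""
    with open(path, newline="", encoding="utf-8") as handle:
        return list(csv.DictReader(handle))


def read_archive(directory, read_glob, read_one, log=print):
    """Parse all CSV files in a directory, degrading gracefully on errors."""
    directory = Path(directory)
    # Level 1: hand all files to the fast parser in a single operation.
    try:
        return read_glob(str(directory / "*.csv"))
    except Exception as error:
        log(f"glob read failed, retrying file by file: {error}")
    rows = []
    for path in sorted(directory.glob("*.csv")):
        # Level 2: same fast parser, but one file at a time.
        try:
            rows.extend(read_one(path))
        except Exception as error:
            log(f"falling back to csv module for {path.name}: {error}")
            rows.extend(read_with_stdlib(path))
    return rows


def strict_one(path):
    """Stand-in for a fast parser that rejects backslash-escaped quotes."""
    if '\\"' in Path(path).read_text(encoding="utf-8"):
        raise ValueError(f"bad escape in {Path(path).name}")
    return read_with_stdlib(path)


def strict_glob(pattern):
    """Stand-in for reading several files with one wildcard pattern."""
    pattern = Path(pattern)
    rows = []
    for path in sorted(pattern.parent.glob(pattern.name)):
        rows.extend(strict_one(path))
    return rows


with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "a.csv").write_text('id,text\n1,"fine"\n', encoding="utf-8")
    Path(tmp, "b.csv").write_text('id,text\n2,"say \\"hi\\""\n', encoding="utf-8")
    messages = []
    rows = read_archive(tmp, strict_glob, strict_one, log=messages.append)
```

Logging each downgrade matters as much as the fallback itself: it is how broken files that were silently handled still come to light afterwards.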

Five Eudsasordb 🤓 Timelines

The proof of the pudding is, of course, in the eating. So here are five timelines I produced with shantay. Each covers the exact same time period from to but illuminates a different aspect of the database and its contents. Also, the first three timelines graph daily data and the remaining two monthly data.

Doing content moderation well is hard at today’s scales. On busy days, protection of minors alone may account for 500,000 plus content moderation actions across Europe alone.

At the same time, protection of minors is not a particularly prominent category for content moderation. Out of 15 categories currently used by the DSA transparency database, protection of minors accounts for only 0.3% of all content moderation actions.

Digital service providers largely treat optional compliance requirements as non-existent compliance requirements. I’m sure you are as shocked as I am about this realization. Still, the numbers are stark: Overall, only 3.5% of all SoRs include keywords. Also, for protection of minors, that fraction, at 9.5%, is almost three times larger and seems to be increasing, which is unexpected.

The platform determines whether to include keywords or not. Out of the 35 platforms that have contributed SoRs concerning the protection of minors, only a third, i.e., 12 platforms, filed SoRs with keywords. That also means that any analysis that focuses on SoRs with keywords only is likely to yield biased results.

At least for protection of minors, there are vast differences between, say, twelve- or thirteen-year-olds sneaking onto social media before their time, keyword age-specific restrictions, teenagers sexting each other, keyword CSAM (child sexual abuse material), and adults exchanging child pornography, also keyword CSAM. As the example illustrates, if anything, the current keywords are not fine-grained enough. The data reflects that too, with a large fraction of SoRs marked as KEYWORD_OTHER.

In summary, the data that is readily available today consistently demonstrates the need for a more fine-grained classification of content moderation. That is particularly important for a category such as Protection of Minors that currently erases the difference between benign and arguably healthy activities, notably teenagers consensually sexting amongst themselves, and rather odious, criminal conduct such as child pornography. Making existing keywords mandatory would improve matters, but still is not granular enough.

Sashay Away?

Me and shantay will be mining the data for more insight for sure. Hopefully, we can work around some of the limitations with what’s currently available. I might have an idea or two already. Along the way, I’m going to continue improving shantay. In fact, I just made the first release. Its support for extracting categories other than protection of minors is still very raw and shaky. But with data retention expiring towards the end of the month, releasing early seemed the right approach.

Meanwhile the EU would be well-advised to up its transparency game. Unfortunately, the current effort reeks of transparency theater, producing large volumes of daily data that end up meaning not that much. My recommendation is to start with mandating keywords. Without them, the DSA transparency database would seem doomed to decline and eventual failure. The second priority should be documenting categories and keywords in more detail. Not having done so is a grave oversight. Once those two priorities have been addressed, a consultation across industry and academe on additional keywords would be great. Of course, there’s also the question of open source development…

With the US government preoccupied with its own dismantling while also cosying up with genocidal thugs, the EU has become the last remaining torchbearer for democratic, rational, and, yes, also technocratic government. But that requires that the EU deliver on efforts such as the DSA transparency database. After all, it is one of its more visible and ambitious efforts of late. So European Commission, how about it?